AIIDE 2021 - Stardust’s learning

I investigated how Stardust’s learning works, and what it learned. It’s unusual, so it was worth a close look.

In its learning file of game records for each opponent, Stardust records values for 3 keys for each game: firstDarkTemplarCompleted, pylonInOurMain, and firstMutaliskCompleted. If the event occurs in the game, the value is the frame time of the event; otherwise the value is 2147483647 (INT_MAX, the largest int value in this C++ implementation). It also records whether the game was a win or a loss. It records the hash of the map, too, but that doesn’t seem to be used again.
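To make the record format concrete, here is a minimal C++ sketch of one game's record, assuming it can be modeled as a map from key name to frame plus a win flag. The `GameRecord` type, its members, and `noteEvent` are hypothetical names for illustration, not Stardust's actual code.

```cpp
#include <climits>
#include <map>
#include <string>

// Hypothetical sketch of one game's learning record, following the description
// above. The type and member names are illustrative, not Stardust's own.
struct GameRecord {
    std::string mapHash;  // recorded, but apparently not used again
    bool won = false;     // whether the game was a win or a loss

    // Frame at which each event happened; INT_MAX (2147483647) means it never did.
    std::map<std::string, int> eventFrames{
        {"firstDarkTemplarCompleted", INT_MAX},
        {"pylonInOurMain", INT_MAX},
        {"firstMutaliskCompleted", INT_MAX},
    };

    // Keep the earliest frame seen for a tracked key; unknown keys are ignored.
    void noteEvent(const std::string &key, int frame) {
        auto it = eventFrames.find(key);
        if (it != eventFrames.end() && frame < it->second) it->second = frame;
    }
};
```

A game in which no dark templar, proxy pylon, or mutalisk ever showed up simply keeps all three values at 2147483647.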

summarizing the data

The class Opponent is responsible for providing the learned information to the rest of the bot. It summarizes the game records via two routines.

```cpp
int minValueInPreviousGames(const std::string &key, int defaultNoData, int maxCount = INT_MAX, int minCount = 0);
```

If there are at least minCount games, look through the game records, most recent first, for up to maxCount games. Look up the key in each game and return the minimum value, or the default value if there are too few games or no values. This amounts to finding the earliest frame at which the event happened; since a game where the event did not happen records 2147483647, the result is effectively “never” if it did not happen in any of the games examined.
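A sketch of the lookup follows, under stated assumptions. Stardust's version reads the opponent's stored records itself; this one takes them as a vector, oldest game first, with each game a map from key to frame (the `EventFrames` alias is hypothetical). It also assumes the default is returned only when there are too few games or no game in the window has the key, so an event recorded as 2147483647 in every examined game comes back as 2147483647, that is, never.

```cpp
#include <algorithm>
#include <climits>
#include <map>
#include <string>
#include <vector>

// One game's events: key -> frame, with INT_MAX meaning "did not happen".
using EventFrames = std::map<std::string, int>;

// Sketch of the summary routine. Unlike Stardust, which reads its own stored
// records, this takes the records explicitly, oldest game first.
// Assumption: the default is returned only when there are too few games or no
// game in the window has the key; an event that never happened yields INT_MAX.
int minValueInPreviousGames(const std::vector<EventFrames> &games,
                            const std::string &key, int defaultNoData,
                            int maxCount = INT_MAX, int minCount = 0) {
    if (static_cast<int>(games.size()) < minCount) return defaultNoData;

    bool found = false;
    int result = INT_MAX;
    int examined = 0;
    // Most recent game first, looking at no more than maxCount games.
    for (auto it = games.rbegin(); it != games.rend() && examined < maxCount;
         ++it, ++examined) {
        auto value = it->find(key);
        if (value == it->end()) continue;
        found = true;
        result = std::min(result, value->second);
    }
    return found ? result : defaultNoData;
}
```

With 12 recorded games and `minCount = 10`, the most recent games are searched; with only 5 games, the same call returns the default.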

```cpp
double winLossRatio(double defaultValue, int maxCount = INT_MAX);
```

Look through the game records, most recent first, for up to maxCount games and return the winning ratio, or the default value if there are no games yet.
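The win ratio can be sketched the same way. This assumes the results are passed in as a vector of booleans (true for a win), oldest first, instead of being read from Stardust's stored records; judging by the 0.99 threshold used in the PvP strategy code, the value is the fraction of games won rather than wins divided by losses.

```cpp
#include <climits>
#include <vector>

// Sketch only: each game result is true for a win, stored oldest first.
// Returns the fraction of the examined games that were won.
double winLossRatio(const std::vector<bool> &results, double defaultValue,
                    int maxCount = INT_MAX) {
    int games = 0;
    int wins = 0;
    // Most recent game first, at most maxCount games.
    for (auto it = results.rbegin(); it != results.rend() && games < maxCount; ++it) {
        ++games;
        if (*it) ++wins;
    }
    return games == 0 ? defaultValue : static_cast<double>(wins) / games;
}
```

For results {loss, win, win, win}, the whole history gives 0.75, while looking back only 3 games gives 1.0.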

using the summarized data

Each of the 3 keys is used in exactly one place in the code. Here is where firstDarkTemplarCompleted is looked up in the PvP strategy code:

```cpp
if (Opponent::winLossRatio(0.0, 200) < 0.99)
{
    expectedCompletionFrame = Opponent::minValueInPreviousGames("firstDarkTemplarCompleted", 7300, 15, 10);
}
```

This means “If we’re rolling you absolutely flat (at least 99% wins in the last 200 games), then it doesn’t matter. Otherwise there’s some risk. In the most recent 15 games (provided there have been at least 10), find the earliest frame that the first enemy dark templar was (estimated to be) completed, or return frame 7300 if there is no data.” The default frame 7300 is not the earliest a DT can emerge; they can be on the map over a thousand frames earlier. So it is not a worst-case assumption. Further code overrides the frame number if there is scouting information related to dark templar production. Stardust attempts to build a defensive photon cannon just in time for the enemy DT’s arrival, and sometimes to get an observer.

The key pylonInOurMain is part of cannon rush defense. Stardust again checks the win ratio and again looks back 15 games with a minimum game count of 10, this time with a default of 0 if there are not enough games. It starts scouting its own base 500 frames (about 21 seconds) before the earliest frame at which an enemy pylon has appeared there, which may be never. The idea is that Stardust doesn’t waste time scouting its own base if it hasn’t seen you proxy a pylon in the last 15 games, and delays the scout if the pylon is proxied late.
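The timing arithmetic is easy to check. This sketch assumes Brood War's fastest game speed of 42 ms per frame, which is where “500 frames, about 21 seconds” comes from. `ownMainScoutStartFrame` is a hypothetical helper, not Stardust's code; in particular, clamping an early or default-0 pylon frame to frame 0 (scout right away) is an assumption.

```cpp
#include <climits>

// Brood War's "fastest" speed runs at 42 ms per frame (about 23.8 frames per second).
constexpr double kMillisecondsPerFrame = 42.0;

double framesToSeconds(int frames) { return frames * kMillisecondsPerFrame / 1000.0; }

// Hypothetical helper, not Stardust's code: the frame at which to start scouting
// our own main, given the learned earliest proxy-pylon frame.
// INT_MAX means no pylon was seen in the games examined, so never scout.
int ownMainScoutStartFrame(int earliestPylonFrame, int leadFrames = 500) {
    if (earliestPylonFrame == INT_MAX) return INT_MAX;
    // Clamp so that an early (or default 0) pylon frame means "scout right away".
    return earliestPylonFrame > leadFrames ? earliestPylonFrame - leadFrames : 0;
}
```

For example, a pylon first seen at frame 2721 would put the scout start at frame 2221, and a history with no proxy pylon at all means no scout.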

The key firstMutaliskCompleted is used very similarly, to decide whether and when to defend each nexus with cannons. The goal is to get cannons in time in case mutalisks arrive without being scouted. There are simple rules to decide how many cannons at each nexus:

```cpp
// Main and natural are special cases, we only get cannons there to defend against air threats
if (base == Map::getMyMain() || base == Map::getMyNatural())
{
    if (enemyAirUnits > 6) return 4;
    if (enemyAirThreat) return 3;
    if (enemyDropThreat && BWAPI::Broodwar->getFrameCount() > 8000) return 1;
    return 0;
}

// At expansions we get cannons if the enemy is not contained or has an air threat
if (!Strategist::isEnemyContained() || enemyAirUnits > 0) return 2;
if (enemyAirThreat || enemyDropThreat) return 1;
return 0;
```

If the firstMutaliskCompleted check says that it’s time, it sets enemyAirThreat to true and makes 3 cannons each at main and natural, and at least 1 at each other base.
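To see the effect of setting enemyAirThreat, the excerpt can be made self-contained by turning Stardust's globals (`Map`, `Strategist`, the BWAPI frame count) into parameters. `CannonContext` and `desiredCannons` are hypothetical names; the rules themselves are copied from the excerpt.

```cpp
// Self-contained version of the quoted cannon-count rules. Stardust's globals
// become plain fields; the struct and function names are hypothetical.
struct CannonContext {
    bool isMainOrNatural;
    int enemyAirUnits;
    bool enemyAirThreat;
    bool enemyDropThreat;
    int frame;
    bool enemyContained;
};

int desiredCannons(const CannonContext &c) {
    // Main and natural: cannons only against air threats (or a late drop threat).
    if (c.isMainOrNatural) {
        if (c.enemyAirUnits > 6) return 4;
        if (c.enemyAirThreat) return 3;
        if (c.enemyDropThreat && c.frame > 8000) return 1;
        return 0;
    }
    // Other bases: 2 if the enemy is not contained or has air units out.
    if (!c.enemyContained || c.enemyAirUnits > 0) return 2;
    if (c.enemyAirThreat || c.enemyDropThreat) return 1;
    return 0;
}
```

With enemyAirThreat set and no air units seen yet, the main gets 3 cannons and an expansion gets 1 if the enemy is contained, 2 if it is not.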

the data itself

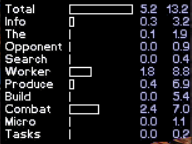

Here’s my summary of the data in Stardust’s files. The files include prepared data, which I left out; this covers only what was recorded during the tournament. The tournament was run for 157 rounds, although the official results are given after round 150. The table covers all 157 rounds. I don’t have a way to tell which unrecorded games were from rounds 1-150 and which were from 151-157... though I think I could guess.

n is the number of games for which a value (other than 2147483647) was recorded for the key. The values are frame numbers.

Column groups: DT = firstDarkTemplarCompleted, pylon = pylonInOurMain, muta = firstMutaliskCompleted.

| opponent | games | DT n | DT min | DT median | DT max | pylon n | pylon min | pylon median | pylon max | muta n | muta min | muta median | muta max |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| bananabrain | 155 | 20 | 7579 | 7897.5 | 23319 | 0 | - | - | - | 0 | - | - | - |
| dragon | 156 | 0 | - | - | - | 0 | - | - | - | 0 | - | - | - |
| steamhammer | 158 | 0 | - | - | - | 0 | - | - | - | 17 | 7188 | 8241 | 10355 |
| mcrave | 157 | 0 | - | - | - | 0 | - | - | - | 124 | 9070 | 10939 | 16146 |
| willyt | 157 | 0 | - | - | - | 0 | - | - | - | 0 | - | - | - |
| microwave | 157 | 0 | - | - | - | 0 | - | - | - | 17 | 7371 | 8534 | 11397 |
| daqin | 156 | 126 | 7533 | 7912.5 | 18154 | 2 | 2721 | 2743.5 | 2766 | 0 | - | - | - |
| freshmeat | 157 | 0 | - | - | - | 0 | - | - | - | 1 | 16801 | 16801 | 16801 |
| ualbertabot | 157 | 17 | 6230 | 6477 | 6627 | 0 | - | - | - | 0 | - | - | - |
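The exact procedure behind the table isn't stated. This sketch assumes values of 2147483647 are dropped before computing n, min, median, and max, and that an even count takes the mean of the two middle values, which matches medians such as DaQin's 2743.5 for pylonInOurMain. `KeySummary` and `summarize` are hypothetical names.

```cpp
#include <algorithm>
#include <climits>
#include <vector>

// Summary statistics for one key and one opponent, as in the table columns.
struct KeySummary {
    int n = 0;
    int min = 0;
    double median = 0.0;
    int max = 0;
};

// Drop INT_MAX ("did not happen") values, then take n, min, median, and max.
// When n == 0 the other fields are meaningless (shown as "-" in the table).
KeySummary summarize(std::vector<int> frames) {
    frames.erase(std::remove(frames.begin(), frames.end(), INT_MAX), frames.end());
    KeySummary s;
    s.n = static_cast<int>(frames.size());
    if (s.n == 0) return s;
    std::sort(frames.begin(), frames.end());
    s.min = frames.front();
    s.max = frames.back();
    const int mid = s.n / 2;
    // An even count averages the two middle values, giving the .5 medians.
    s.median = (s.n % 2 == 1) ? frames[mid] : (frames[mid - 1] + frames[mid]) / 2.0;
    return s;
}
```

Feeding in DaQin's two recorded pylon frames (2721 and 2766) among never-happened values reproduces n = 2, min 2721, median 2743.5, max 2766.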

As you might expect after deep contemplation of the nature of reality, only protoss makes dark templar or proxy pylons, and only zerg makes mutalisks. Nothing interesting was recorded for the terran opponents.

Notice that UAlbertaBot sometimes makes dark templar, and when it does they come much earlier than the no-data default of frame 7300; the other protoss opponents’ dark templar do not. DaQin is recorded as twice placing a proxy pylon in Stardust’s main. I didn’t think it ever did that. I guess it’s a holdover from the Locutus proxy pylon play, to trick opponents into overreacting? DaQin made DTs in most games, and McRave went mutalisks in most games. FreshMeat is recorded as having made a mutalisk (or more than one) in exactly one game, which seems unusual.